@guillem First, you are correct the “D” exchange (FINRA ADF) actually aggregates trades from several Trade Reporting Facilities (TRFs). Alpaca doesn’t break out the TRF. The trade_ids are simply offset with a number for each.

I can’t replicate your issue. I fetch all AMZN trades for 2024-04-04 and find no duplicates. How are you fetching trades. Below is my entire code.

import pandas as pd

import requests

# Alpaca API key and Secret key

API_KEY = "xxxx"

SECRET_KEY = "xxxx"

def get_trades(symbols, start, end):

BASE_URL = 'https://data.alpaca.markets'

ENDPOINT = '/v2/stocks/trades'

HEADERS = {'APCA-API-KEY-ID':API_KEY,

'APCA-API-SECRET-KEY':SECRET_KEY,

'Content-Type': 'application/json'

}

params = {'symbols':symbols,

'start':start.isoformat(),

'end':end.isoformat(),

'feed':'sip',

'limit':10000,

'page_token':None,

}

# initialize things

trades = {}

first_pass = True

while first_pass or page_token != None:

first_pass = False

response = requests.get(BASE_URL+ENDPOINT, headers=HEADERS, params=params)

# select trades from response and concatenate to previous trades

json_data = response.json()['trades']

trades = trades | json_data

# check for a next_page_token

page_token = response.json()['next_page_token']

params['page_token'] = page_token

return trades

def convert_json_trades_to_df(json_data, time_zone='America/New_York'):

# map columns

column_names = {'symbol':'symbol',

't':'timestamp',

'p':'price',

's':'size',

'x':'exchange',

'z':'tape',

'i':'index',

'c':'conditions',

}

# add symbol to each json record and convert to dataframe

records = [dict(records, symbol=stock) for stock, recordset in json_data.items() for records in recordset]

df = pd.DataFrame.from_records(records, columns=column_names.keys())

# rename columns, set timezone, set index

df.rename(columns=column_names, inplace=True)

df['timestamp'] = pd.to_datetime(df.timestamp).dt.tz_convert(time_zone)

df.set_index(['symbol', 'timestamp'], inplace=True)

return df

# get trades and convert to dataframe

trades = get_trades(symbols='AMZN',

start=pd.to_datetime('2024-04-04 00:00').tz_localize('America/New_York'),

end=pd.to_datetime('2024-04-04 23:00').tz_localize('America/New_York'),

)

trades_df = convert_json_trades_to_df(trades)

# find duplicates

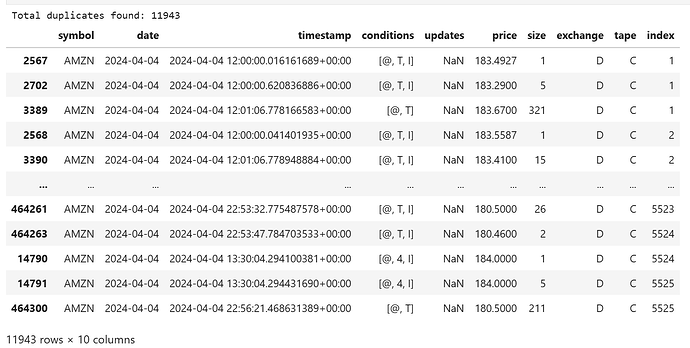

duplicate_index = trades_df.reset_index().duplicated(subset=[ 'symbol','exchange','index'])

duplicates = trades_df.reset_index().loc[duplicate_index]

display(duplicates)

The result for duplicates is an empty dataframe.

What timezone are you specifying? Are you by chance fetching the evening of one day and the morning of the next (because of the timezone)?